This post explains how to deploy a Linux Container Workload on the Azure Kubernetes Service.

1. Introduction

This post explains how to deploy a Linux Container Workload on the Azure Kubernetes Service (AKS). The corresponding Container Image is stored and managed in an Azure Container Registry. The deployment is done by adding dedicated YAML code, which leads to a running Linux Container. The workload terminates after two minutes. A similar post about running a workload on the Azure Kubernetes Service already exists; in contrast to this post, it covers deploying a Windows Container Workload:

Azure - Kubernetes Service: How to deploy a Windows Container Workload

2. Linux Container and Container Management

2.1 Explanation of the Container related files

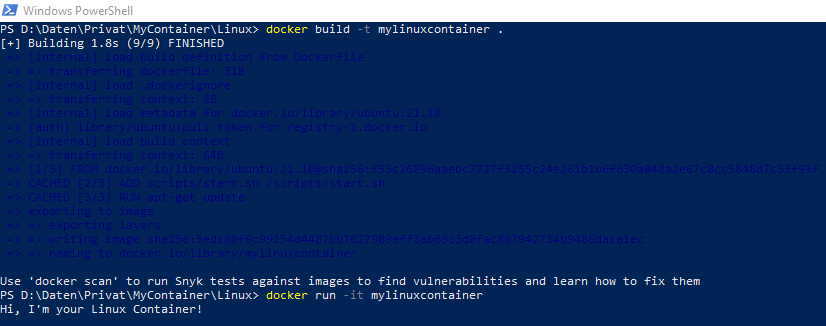

I’m using a simple Linux Dockerfile to demonstrate the deployment of the corresponding workload. This section explains the Dockerfile of the Container. It is derived from an Ubuntu 21.10 image (see the first line). The next line adds a shell script from the local directory “scripts” to the file system of the Container, also inside a “scripts” directory. The third line runs an apt-get update command to update the package list (which is not meaningful in this example, as no package will be installed, but it’s worth mentioning this popular Docker command ;)). The final line defines the mentioned script as the Entrypoint of the Container.

FROM ubuntu:21.10

ADD "scripts/start.sh" "/scripts/start.sh"

RUN apt-get update

ENTRYPOINT "/scripts/start.sh"

The shell script contains a simple “echo” command. After that, it makes the Container sleep for 2 minutes before it terminates with the “exit” command.

#!/bin/sh

echo "Hi, I'm your Linux Container!"

sleep 2m

exit

So, after building and running the Container, it prints “Hi, I’m your Linux Container!” and terminates after two minutes.
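Building and running the Container locally could look like the following sketch. It assumes that Docker is installed, that the current directory contains the Dockerfile and the “scripts” folder, and that the base image and its package repositories are still reachable. The local tag “mylinuxcontainer” is an assumption for illustration, any tag would work.

```shell
#!/bin/sh
# Local tag for the image (assumption, chosen to match the name used in this post).
IMAGE_TAG="mylinuxcontainer"

if command -v docker >/dev/null 2>&1; then
    # Build the image from the Dockerfile in the current directory,
    # then run it once; --rm removes the stopped Container afterwards.
    docker build -t "$IMAGE_TAG" . &&
    docker run --rm "$IMAGE_TAG"   # blocks for about two minutes because of the sleep
else
    echo "docker not found, skipping build of $IMAGE_TAG"
fi
```

The run should print the greeting from start.sh and return to the prompt once the sleep has finished.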

All Container related files can be downloaded from my GitHub account:

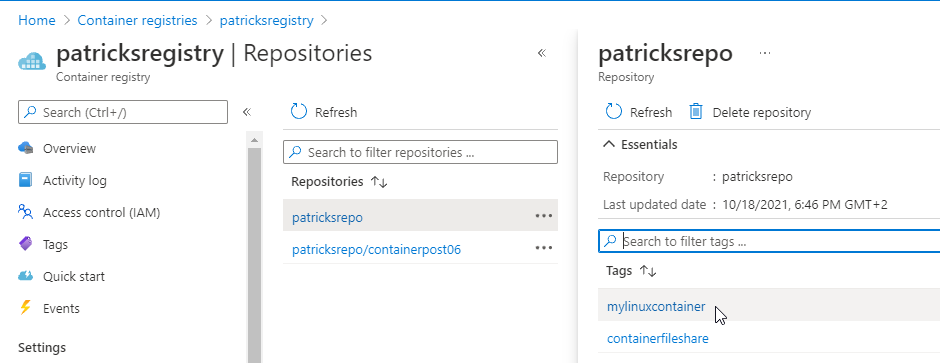

2.2 Azure Container Registry - Management of the Container Image

The Azure Kubernetes Service needs to access the Container Image in some way, so the image is uploaded to a Container Registry. I’ve chosen an Azure Container Registry named “patricksregistry”, which I’ve set up myself for managing the Container Image. The Container Image is tagged “mylinuxcontainer” and is available within the repository “patricksrepo”, which can be seen in the picture below.
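To make the naming concrete, the sketch below splits the image reference used later in the YAML code into registry, repository and tag, and then shows one possible way to upload a locally built image. It assumes the Azure CLI and Docker are installed, that you are logged in to Azure, and that a local image tagged “mylinuxcontainer” exists; that local tag is an assumption and not taken from the registry itself.

```shell
#!/bin/sh
# Image reference as it appears in the Job definition of this post.
IMAGE="patricksregistry.azurecr.io/patricksrepo:mylinuxcontainer"

# Split the reference with POSIX parameter expansion.
LOGIN_SERVER="${IMAGE%%/*}"            # registry host: patricksregistry.azurecr.io
REST="${IMAGE#*/}"                     # repository and tag: patricksrepo:mylinuxcontainer
REPOSITORY="${REST%%:*}"               # repository inside the registry
TAG="${REST##*:}"                      # tag of the image
REGISTRY_NAME="${LOGIN_SERVER%%.*}"    # short registry name used by the Azure CLI

echo "registry=$REGISTRY_NAME repository=$REPOSITORY tag=$TAG"

if command -v az >/dev/null 2>&1 && command -v docker >/dev/null 2>&1; then
    # Authenticate Docker against the registry, re-tag the local image
    # (local tag "mylinuxcontainer" is an assumption) and push it.
    az acr login --name "$REGISTRY_NAME" &&
    docker tag mylinuxcontainer "$IMAGE" &&
    docker push "$IMAGE"
else
    echo "az or docker not found, skipping upload"
fi
```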

3. Deploying the Linux Container Workload

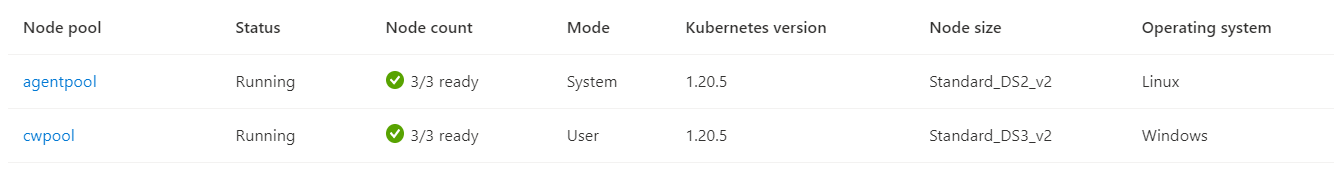

3.1 The Azure Kubernetes Service

The AKS cluster that I’m using for the deployment can run both Linux and Windows Container Workloads, but in this case only the Linux Node pool “agentpool” is needed. One of the three available Nodes will be chosen to host the corresponding Pod and its Container.
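Which Nodes are candidates for the Pod can also be checked from a terminal. This is only a sketch, assuming kubectl is installed and already configured for the cluster; it filters by the same operating system label that the node selector of the workload relies on.

```shell
#!/bin/sh
# Label that Kubernetes sets on every Node according to its operating system.
OS_SELECTOR="kubernetes.io/os=linux"

if command -v kubectl >/dev/null 2>&1; then
    # List only the Linux Nodes, i.e. the candidates for hosting the Pod.
    kubectl get nodes --selector="$OS_SELECTOR" -o wide
else
    echo "kubectl not found, cannot list Nodes"
fi
```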

3.2 The Deployment of the Workload

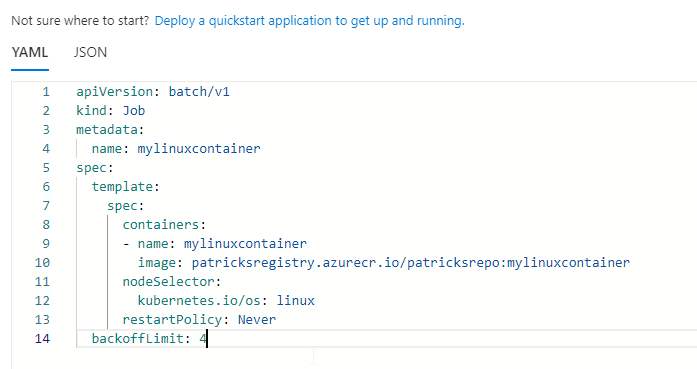

The deployment is done by adding YAML code, which defines the workload. Important parts of the YAML code to notice:

- The workload is of type “Job”
- The name of the Container is set to “mylinuxcontainer”
- The image refers to the mentioned Azure Container Registry “patricksregistry”
- The node selector is defined to choose a node of the OS type “linux”

apiVersion: batch/v1
kind: Job
metadata:
  name: mylinuxcontainer
spec:
  template:
    spec:
      containers:
      - name: mylinuxcontainer
        image: patricksregistry.azurecr.io/patricksrepo:mylinuxcontainer
      nodeSelector:
        kubernetes.io/os: linux
      restartPolicy: Never
  backoffLimit: 4
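The same definition could also be applied from a terminal instead of the portal. A minimal sketch, assuming kubectl is configured for the cluster and the YAML code has been saved as “job.yaml” (a hypothetical file name):

```shell
#!/bin/sh
# Hypothetical file name; save the Job definition from this post under it.
MANIFEST="job.yaml"

if command -v kubectl >/dev/null 2>&1 && [ -f "$MANIFEST" ]; then
    # Create the Job, or update it if it already exists.
    kubectl apply -f "$MANIFEST"
else
    echo "kubectl or $MANIFEST not found, nothing applied"
fi
```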

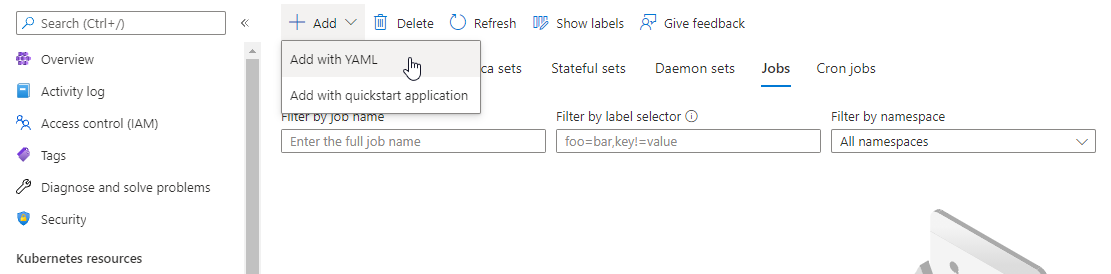

Now, I’m going to trigger the deployment of this workload on the Azure Kubernetes Service by adding the YAML code…

…inserting it…

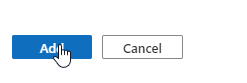

…and pushing the button…

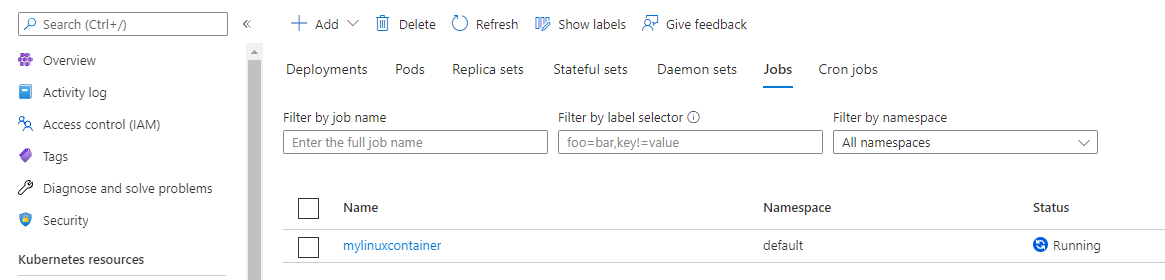

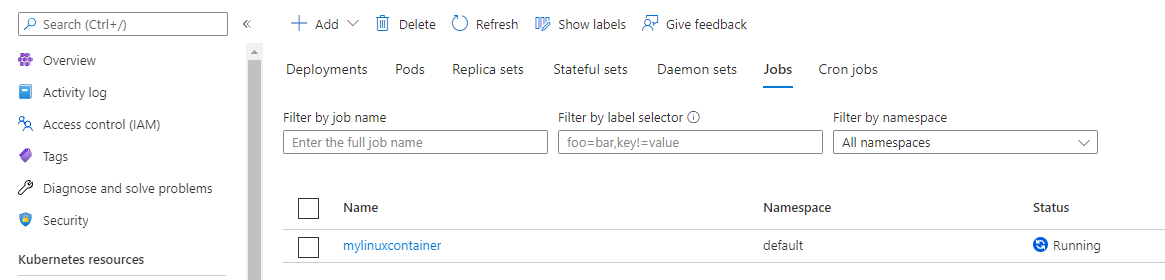

This leads to a running workload. If you click on “mylinuxcontainer”, you’ll be taken to the corresponding Pod.

The Pod reaches the state “running” once the Container Image has been pulled successfully from the Azure Container Registry “patricksregistry”.
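The state of the Pod and the output of the Container can also be checked from a terminal. The sketch below assumes kubectl is configured for the cluster and that the Job lives in the current namespace; it relies on the “job-name” label that Kubernetes attaches to the Pods of a Job.

```shell
#!/bin/sh
JOB_NAME="mylinuxcontainer"
# The Job controller labels its Pods with job-name=<name of the Job>.
POD_SELECTOR="job-name=$JOB_NAME"

if command -v kubectl >/dev/null 2>&1; then
    # Show the Pod(s) of the Job together with their current state.
    kubectl get pods --selector="$POD_SELECTOR"
    # Print the output of the Container, here the greeting from start.sh.
    kubectl logs "job/$JOB_NAME"
else
    echo "kubectl not found, cannot query the Pod"
fi
```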

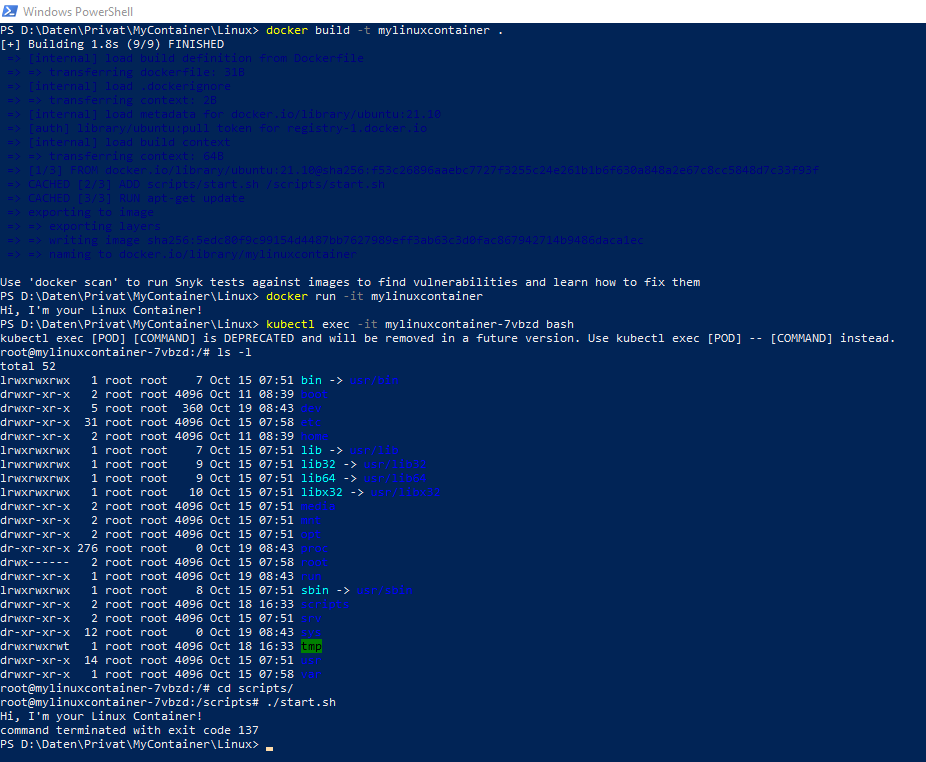

You can access the Pod while it’s running, for instance by using the dedicated “kubectl” command:

kubectl exec -it mylinuxcontainer-7vbzd -- bash

By applying this command, you open a shell inside the “mylinuxcontainer-7vbzd” Pod. Changing the directory to “scripts” and executing “./start.sh” results in the output “Hi, I’m your Linux Container!”

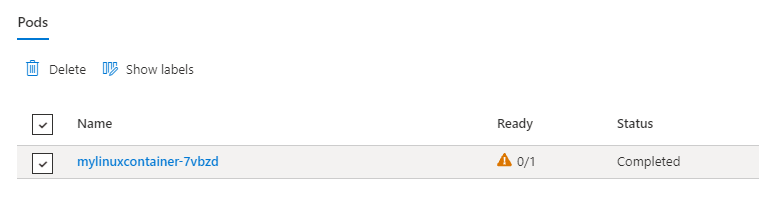

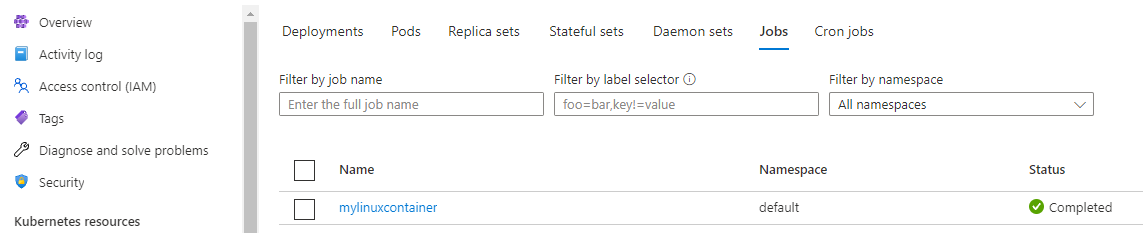

The exit code reveals that the Container has terminated. This can also be observed in the Azure Kubernetes Service: the Pod “mylinuxcontainer-7vbzd” and the related workload “mylinuxcontainer” are completed.
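Completion can be confirmed from a terminal as well. This is a sketch under the assumption that kubectl is configured for the cluster; the timeout of 300 seconds is an assumed value that leaves some room beyond the two-minute sleep and the image pull.

```shell
#!/bin/sh
JOB_NAME="mylinuxcontainer"
# Assumed upper bound: two minutes of sleep plus time for scheduling and image pull.
TIMEOUT="300s"

if command -v kubectl >/dev/null 2>&1; then
    # Block until the Job reports the condition "Complete" or the timeout expires.
    kubectl wait --for=condition=complete "job/$JOB_NAME" --timeout="$TIMEOUT"
    # Show the final status of the Job, including its completions.
    kubectl get job "$JOB_NAME"
else
    echo "kubectl not found, cannot check the Job"
fi
```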

4. Conclusion

Deploying Linux Container Workloads on the Azure Kubernetes Service is quite easy. Either deploy them with “Add with YAML” or use, for example, the dedicated kubectl apply command. Linux-based Containers are small in size and rather fast to run, so it’s really fun to use Linux Container Workloads. The Linux Node Pool is also available by default on the Azure Kubernetes Service, so using Linux Containers seems to be expected ;).

References

Microsoft - Azure Kubernetes Service (AKS)

Microsoft - Docs: Can I run Windows only clusters in AKS?

Kubernetes.io - Workloads: Job