This post explains how to add a Windows node pool to an Azure Kubernetes cluster that was provisioned with Terraform.

1. Introduction

In this post, I’d like to show how you can extend an Azure Kubernetes cluster, which was provisioned with a Terraform configuration, with a Windows node pool. If you need to run Windows workloads, your Kubernetes cluster must be capable of scheduling and hosting them.

In this example, I’ll start by provisioning a “default” Kubernetes cluster with Terraform, so the cluster contains just the default Linux node pool. After that, I’ll show how to extend it with a Windows node pool.

2. Provisioning a “default” Azure Kubernetes Cluster with Terraform

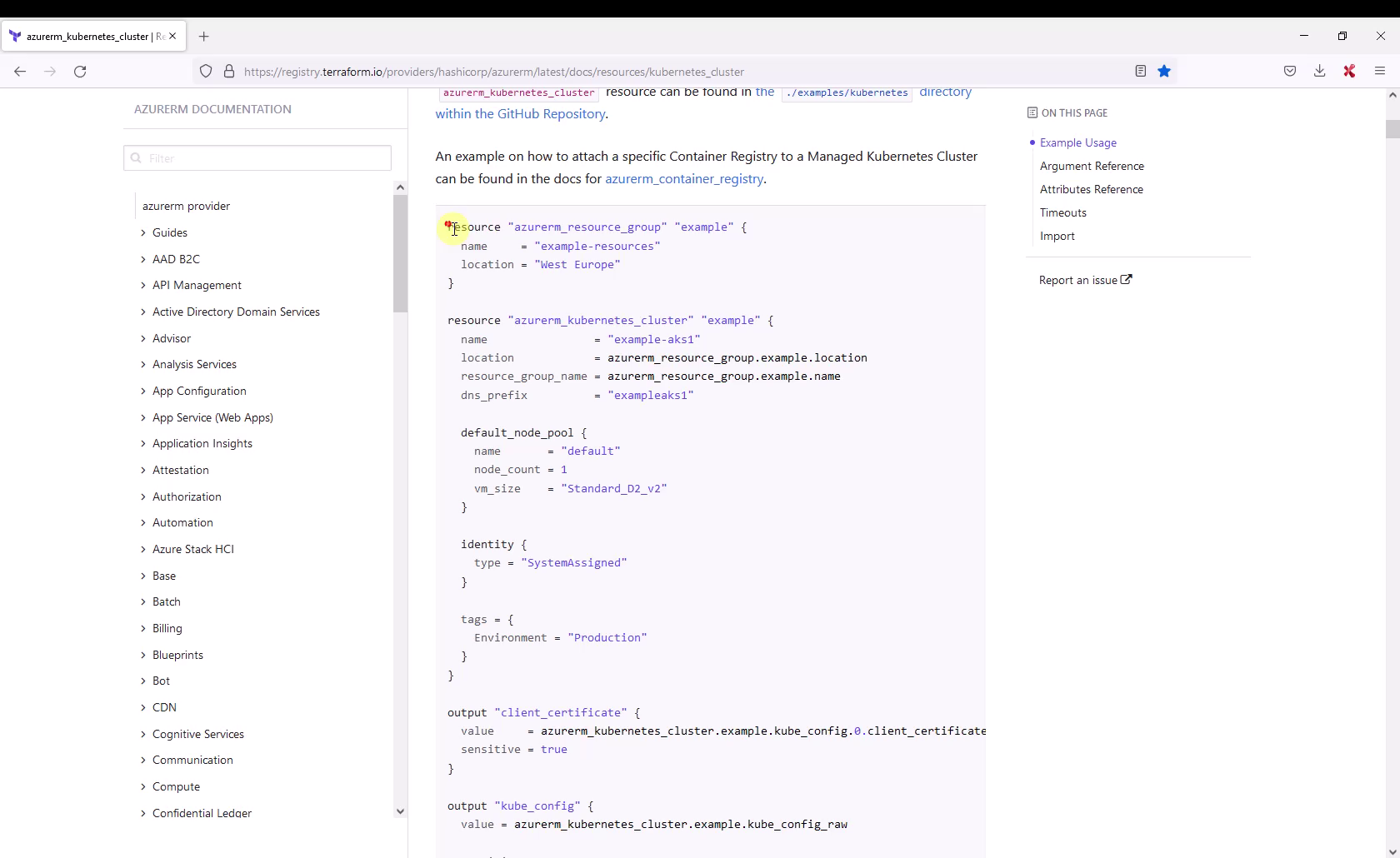

To provision an Azure Kubernetes cluster, I’ll use the example from the official azurerm provider documentation of Terraform, which can be found here:

Terraform - Azurerm - azurerm_kubernetes_cluster

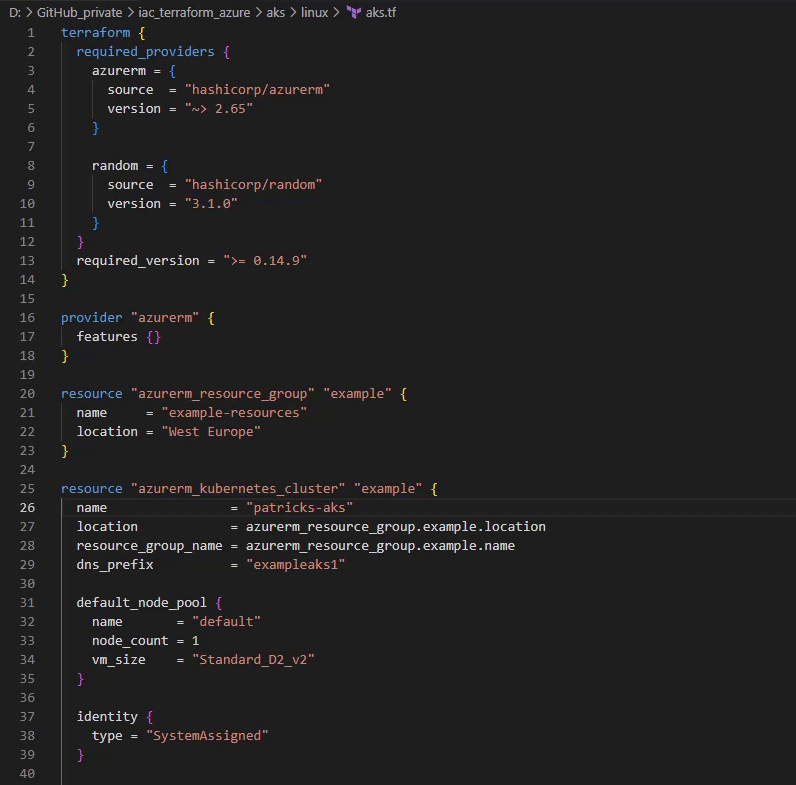

Now I’m going to copy the example provided at the link above into a new file in Visual Studio Code.

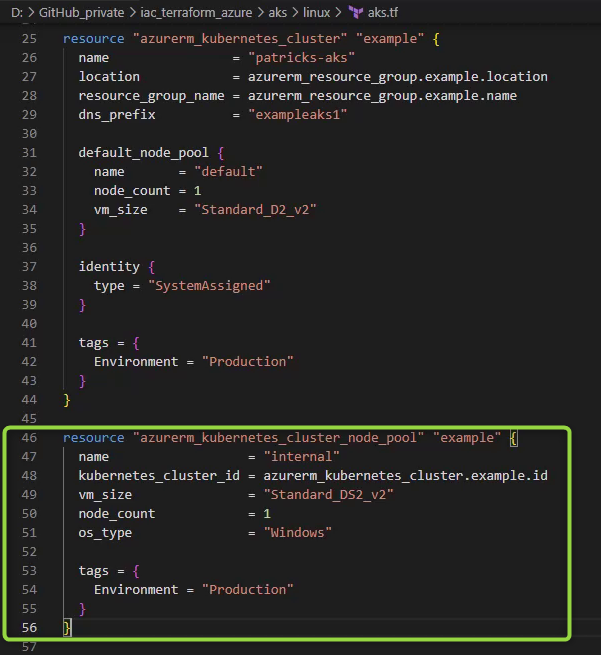

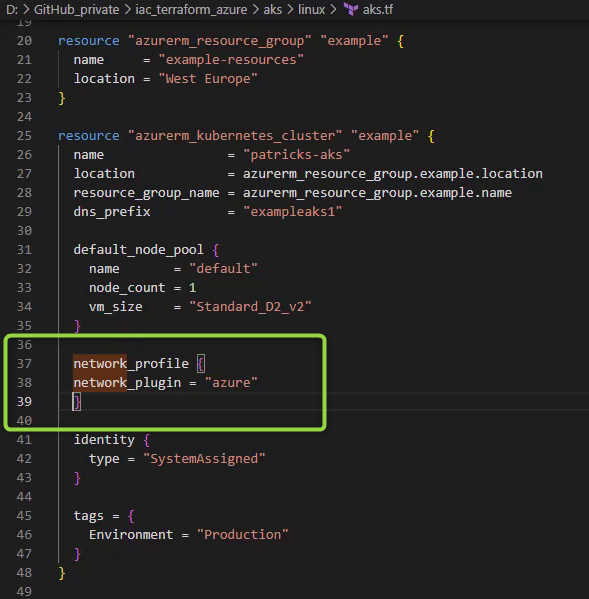

Be aware that lines 1-18 are not included in the official example. I had already prepared them in my Terraform configuration, named “aks.tf”. They contain the “terraform” block, which holds the Terraform settings, and the “provider” block, which is azurerm, as I’d like to provision the resources in Azure. The full configuration, ready to be provisioned, can be seen in the picture below. Take a look at lines 31-35: this nested block is the default_node_pool, which will contain Linux nodes only. I’ve also renamed the cluster to “patricks-aks” (see line 26):

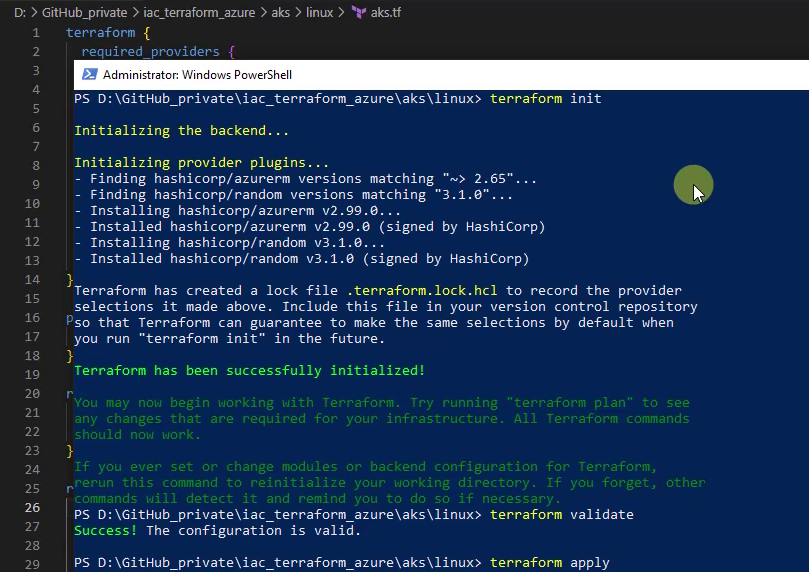

Let’s verify that this example is valid and that the cluster can be provisioned. For that, I’ll run the following Terraform commands:

- terraform init

- terraform validate

- terraform apply

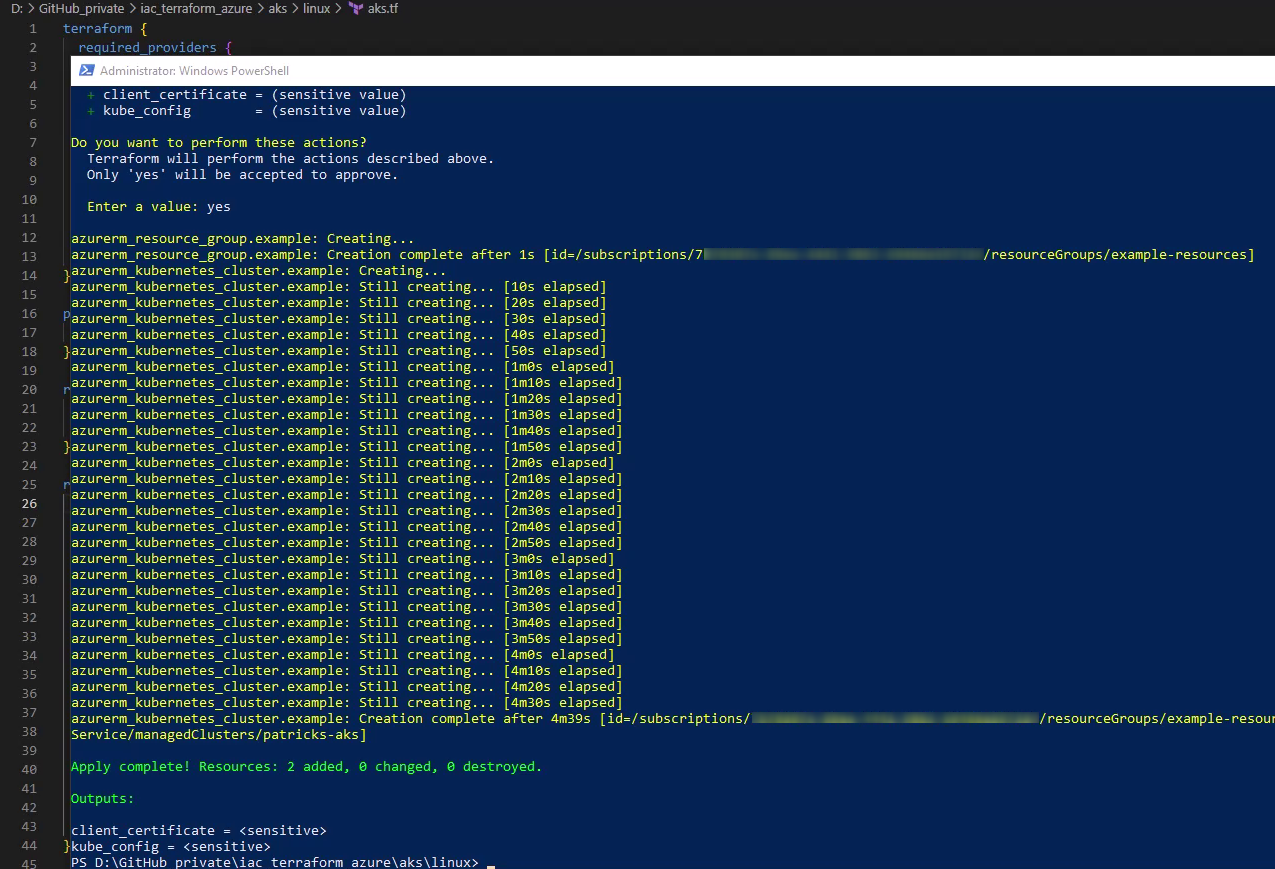

After running the commands, the logs should look similar to those in the picture below. The “apply” command completes after adding two resources:

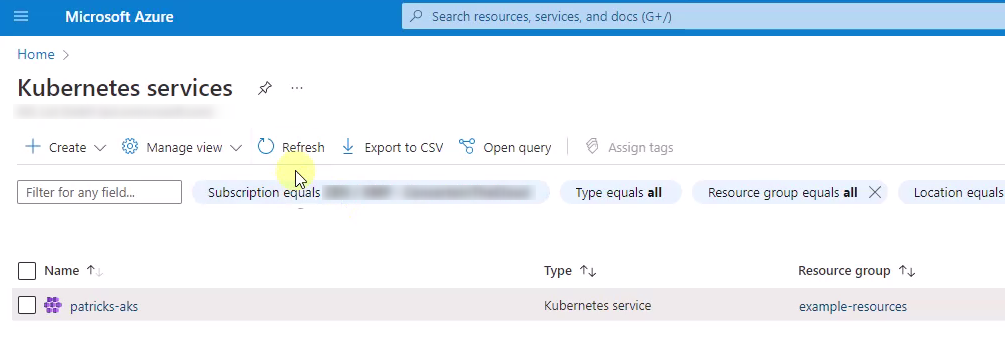

Switching to the Azure Portal reveals that a new Kubernetes service instance (named “patricks-aks”) was created:

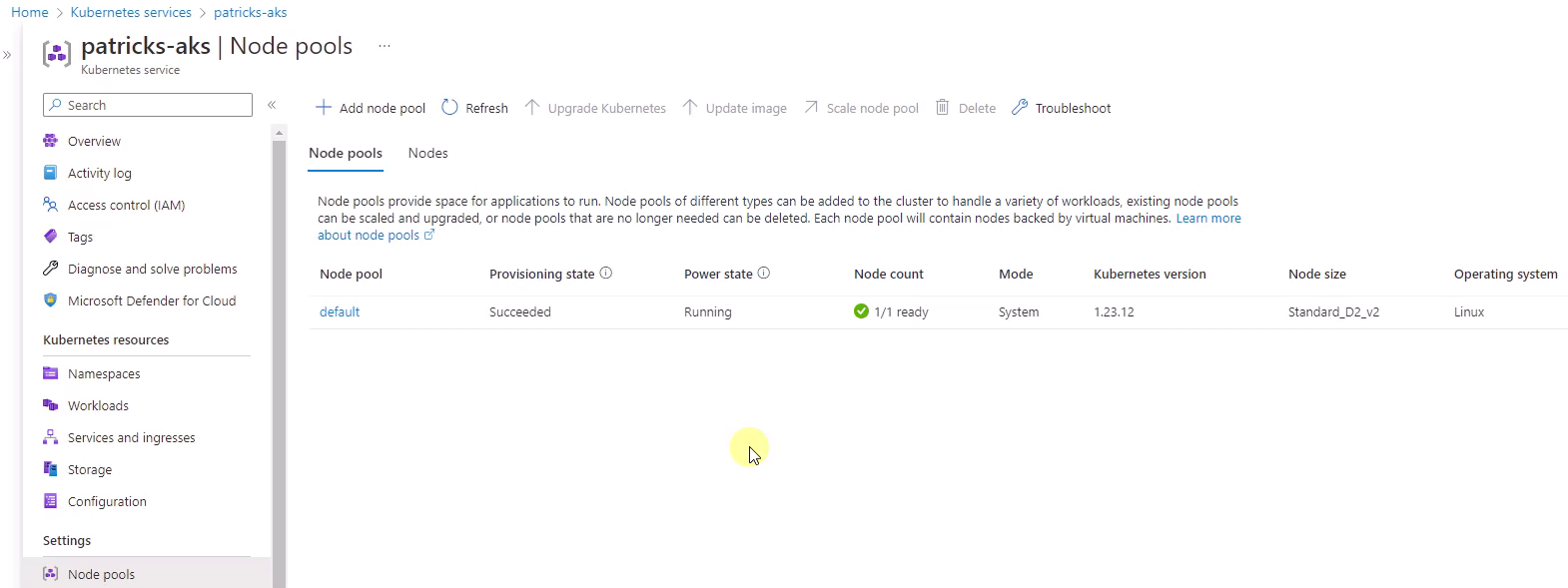

Selecting “patricks-aks” and then “Node pools” in the “Settings” section shows that, as expected, just the “default” node pool was created:

3. Adding resource blocks and statements to upgrade the Azure Kubernetes Cluster with a Windows Node Pool

So this worked as expected. The next step is to add the “resource” block and the statements needed to create the desired Windows node pool.

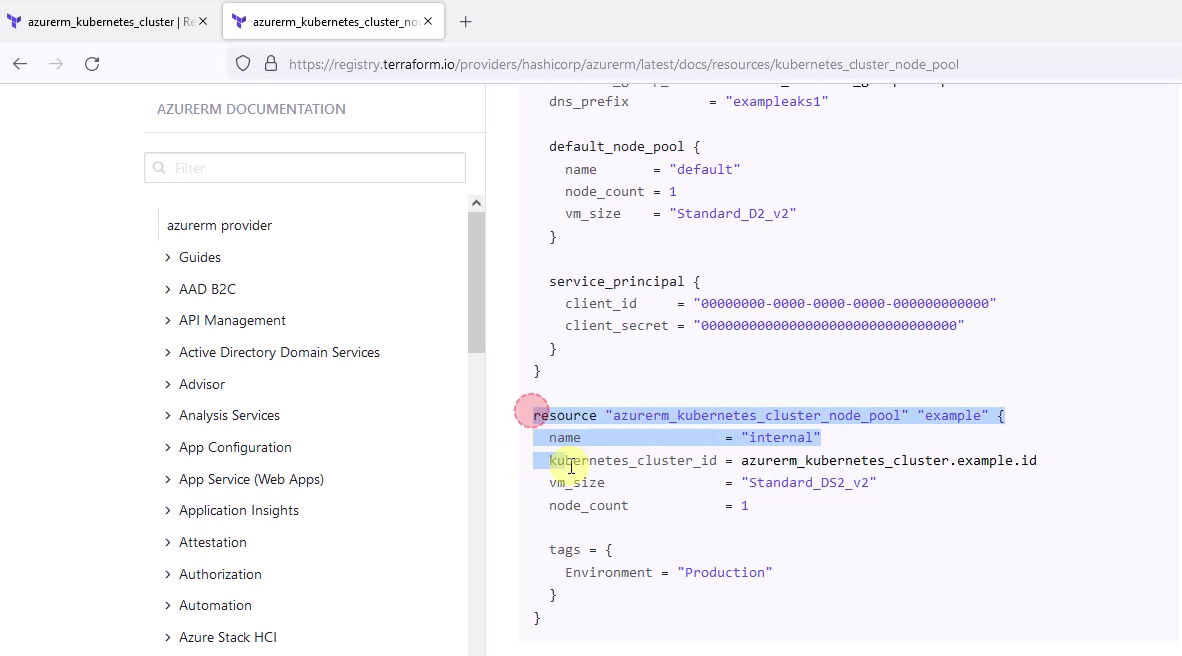

For that, I’ll again use a “resource” block from the official documentation at the following link:

Terraform - Azurerm - azurerm_kubernetes_cluster_node_pool

On this page, I’m going to copy the resource block of the type “azurerm_kubernetes_cluster_node_pool”:

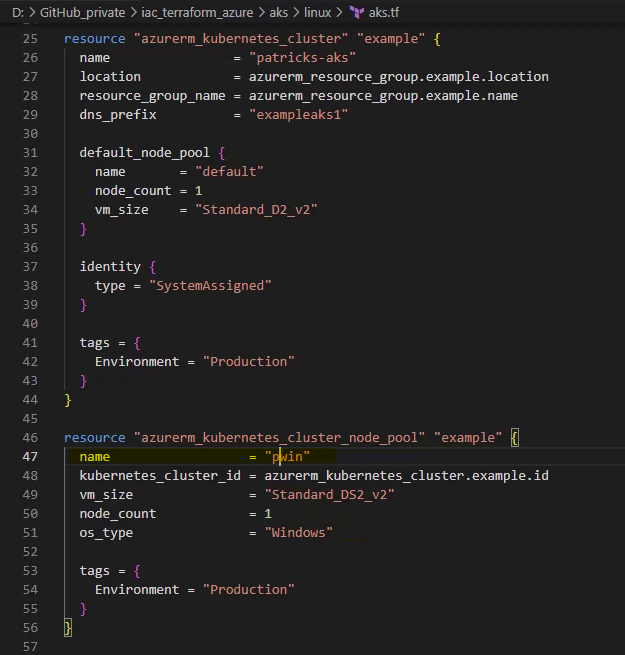

The existing configuration is now simply extended by this resource block. Just a single statement (see below) has to be inserted in line 51, as I’d like to define that node pool as a Windows node pool.

os_type = "Windows"

In theory, the configuration should now be complete. So I’ll apply it again by running the corresponding Terraform command:

terraform apply

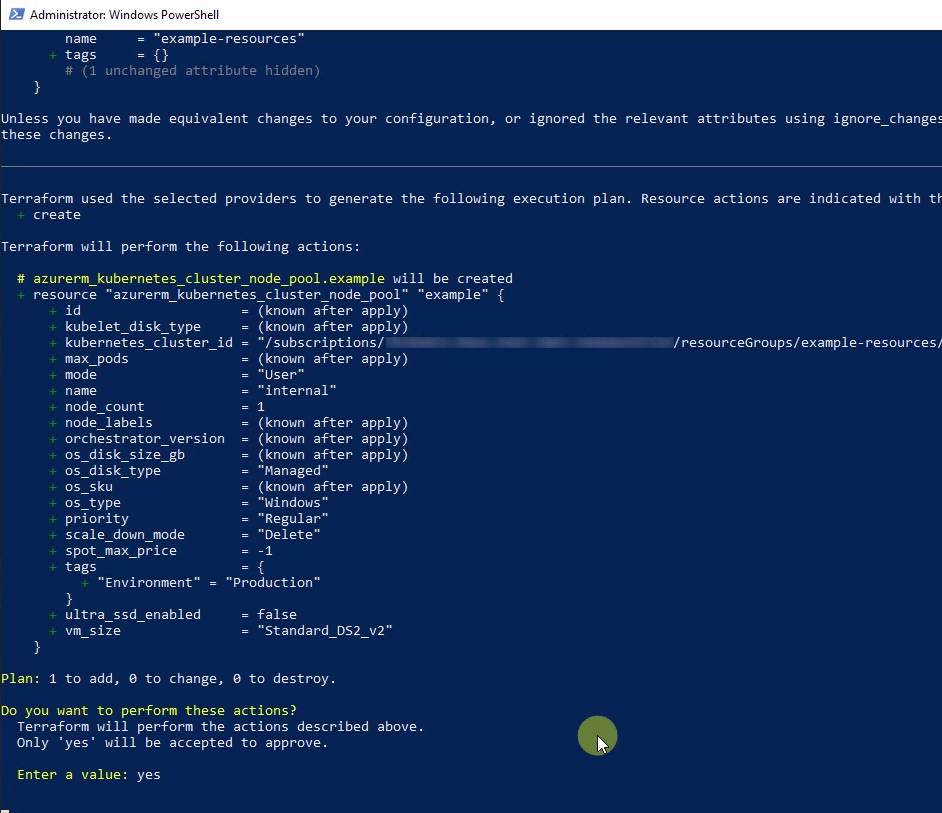

There is no need to destroy the Kubernetes cluster first. Terraform recognizes the change in the configuration, so the logs report that just one item has to be added: the newly inserted resource block of the node pool.

But the logs also report a problem: names of Windows agent pools must not have more than 6 characters:

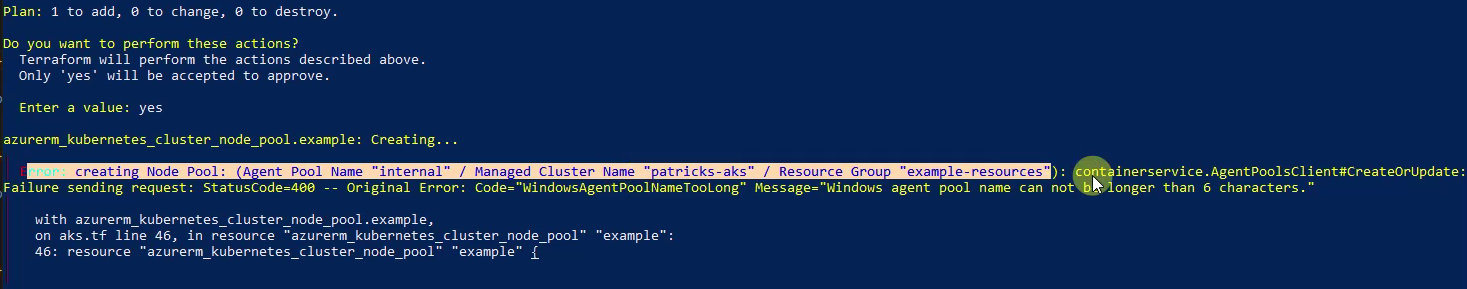

That’s simple to fix: I’ll rename the node pool in line 47 to “pwin”:

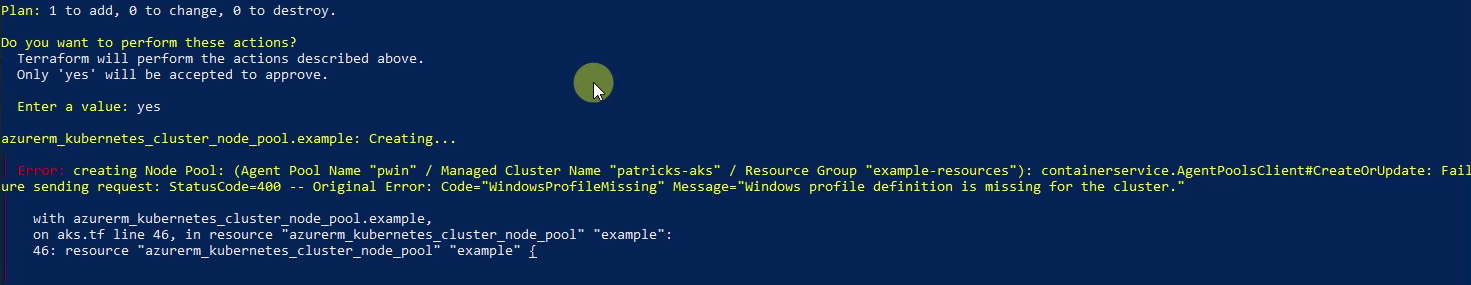
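The 6-character limit from the error above can also be caught before the request reaches Azure. The sketch below is one possible way to do that with a variable validation; it is not part of the original configuration, which hard-codes the name. The variable name `windows_pool_name` is a hypothetical choice, and the remaining values are taken from the final configuration at the end of this post.

```hcl
# Hypothetical variable; the original post hard-codes the name "pwin".
variable "windows_pool_name" {
  description = "Name of the Windows node pool (at most 6 characters)."
  type        = string
  default     = "pwin"

  validation {
    # Reject names that violate the 6-character limit for Windows pools
    condition     = length(var.windows_pool_name) >= 1 && length(var.windows_pool_name) <= 6
    error_message = "The Windows node pool name must be between 1 and 6 characters long."
  }
}

resource "azurerm_kubernetes_cluster_node_pool" "example" {
  name                  = var.windows_pool_name
  kubernetes_cluster_id = azurerm_kubernetes_cluster.example.id
  vm_size               = "Standard_DS2_v2"
  node_count            = 1
  os_type               = "Windows"

  tags = {
    Environment = "Production"
  }
}
```

With a guard like this, Terraform should reject an overly long name while evaluating the configuration instead of failing halfway through the apply.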

Next try: I’ll trigger the provisioning again by running “terraform apply”… but again it does not succeed. This time, the logs report that a Windows profile is missing in the cluster:

How to solve that? Let’s ask a search engine: one of the results refers to a Stack Overflow entry.

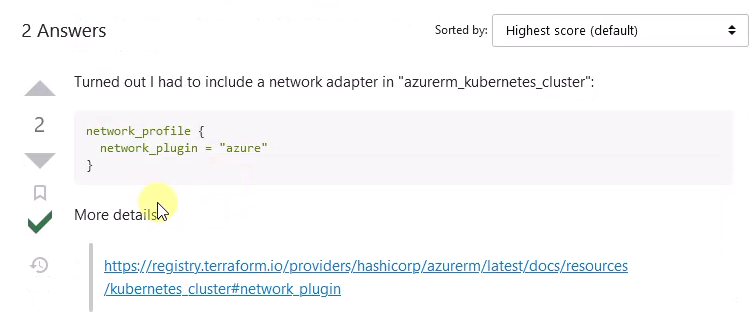

Stack Overflow gives me the answer, so kudos to the user “Milad Ghafoori”:

see stackoverflow.com - windows-agent-pools-can-only-be-added-to-aks-clusters-using-azure-cni

I copy that snippet, a network_profile block that sets network_plugin to “azure” (Azure CNI), and insert it into the resource block of the “azurerm_kubernetes_cluster”:

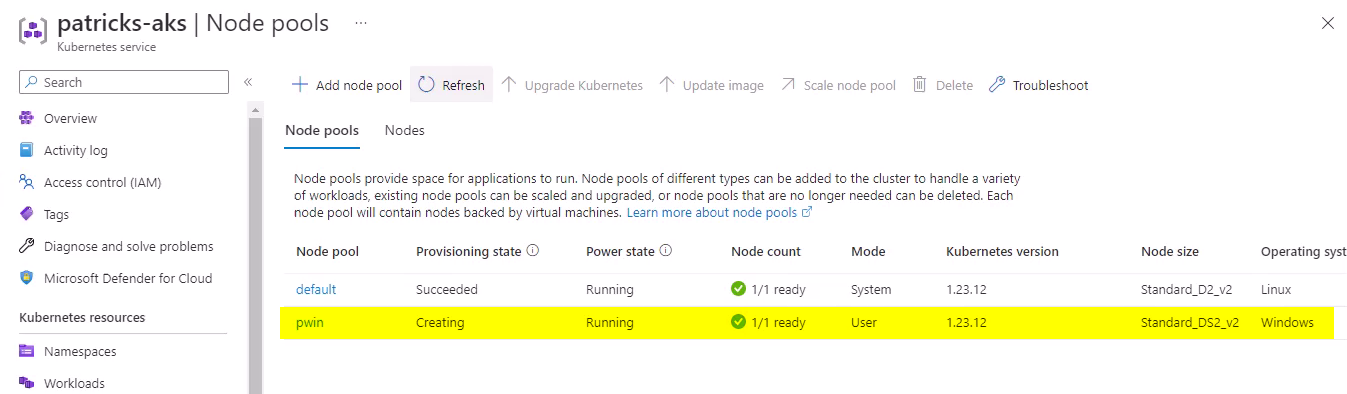
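For readers who cannot see the screenshot: judging by the final configuration at the end of this post, the inserted snippet is presumably the network_profile block below. This is an excerpt rather than a complete resource; the comment marks where the existing arguments of the cluster resource stay unchanged.

```hcl
resource "azurerm_kubernetes_cluster" "example" {
  # name, location, resource_group_name, dns_prefix, default_node_pool,
  # identity and tags stay exactly as before (omitted in this excerpt)

  # According to the referenced Stack Overflow entry, Windows agent pools
  # can only be added to clusters that use Azure CNI networking,
  # which is selected with network_plugin = "azure"
  network_profile {
    network_plugin = "azure"
  }
}
```

Changing the network plugin of an already provisioned cluster may cause Terraform to plan a replacement of the cluster, so it is worth reading the plan output carefully before confirming the apply.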

Finally, the Terraform configuration should be complete. Applying that configuration leads to the creation of an Azure Kubernetes cluster, which also contains a Windows node pool, named “pwin” in my example:

Now, this cluster is also capable of hosting Windows workloads.
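If you prefer to confirm the result from the command line rather than in the Azure Portal, additional outputs are one option. The following is a minimal sketch and not part of the original configuration; the output names `windows_node_pool_id` and `windows_node_pool_os_type` are hypothetical.

```hcl
# Hypothetical outputs, added only for verification purposes
output "windows_node_pool_id" {
  # Azure resource ID of the node pool created by the "example" resource
  value = azurerm_kubernetes_cluster_node_pool.example.id
}

output "windows_node_pool_os_type" {
  # Echoes the configured operating system type of the node pool
  value = azurerm_kubernetes_cluster_node_pool.example.os_type
}
```

After the next “terraform apply”, the values can be displayed with “terraform output”.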

The final Terraform configuration can be found in the code snippet below or in my GitHub repository (see link below):

github.com - patkoch - iac_terraform_azure: aks windows

    terraform {
      required_providers {
        azurerm = {
          source  = "hashicorp/azurerm"
          version = "~> 2.65"
        }

        random = {
          source  = "hashicorp/random"
          version = "3.1.0"
        }
      }

      required_version = ">= 0.14.9"
    }

    provider "azurerm" {
      features {}
    }

    resource "azurerm_resource_group" "example" {
      name     = "example-resources"
      location = "West Europe"
    }

    resource "azurerm_kubernetes_cluster" "example" {
      name                = "patricks-aks"
      location            = azurerm_resource_group.example.location
      resource_group_name = azurerm_resource_group.example.name
      dns_prefix          = "exampleaks1"

      default_node_pool {
        name       = "default"
        node_count = 1
        vm_size    = "Standard_D2_v2"
      }

      network_profile {
        network_plugin = "azure"
      }

      identity {
        type = "SystemAssigned"
      }

      tags = {
        Environment = "Production"
      }
    }

    resource "azurerm_kubernetes_cluster_node_pool" "example" {
      name                  = "pwin"
      kubernetes_cluster_id = azurerm_kubernetes_cluster.example.id
      vm_size               = "Standard_DS2_v2"
      node_count            = 1
      os_type               = "Windows"

      tags = {
        Environment = "Production"
      }
    }

    output "client_certificate" {
      value     = azurerm_kubernetes_cluster.example.kube_config.0.client_certificate
      sensitive = true
    }

    output "kube_config" {
      value     = azurerm_kubernetes_cluster.example.kube_config_raw
      sensitive = true
    }

4. Conclusion

An example configuration for an Azure Kubernetes cluster can be found easily in the official documentation. If you’d like to extend it with Windows nodes, you’ll face a few obstacles:

- don’t forget to declare the operating system type of the newly added node pool (os_type = "Windows")

- be aware of the length restriction on the agent pool name (at most 6 characters for Windows agent pools)

- take care to add a network profile that uses the Azure CNI plugin (network_plugin = "azure") to the Kubernetes cluster

If those items are considered, your Azure Kubernetes cluster is quickly extended with Windows nodes.

References

Terraform - Azurerm - azurerm_kubernetes_cluster

Terraform - Azurerm - azurerm_kubernetes_cluster_node_pool

stackoverflow.com - windows-agent-pools-can-only-be-added-to-aks-clusters-using-azure-cni