This post explains how to provision an Azure OpenAI service, including a GPT-4 deployment, and how to access it using the REST API.

1. Introduction

When thinking about artificial intelligence, the first thing that probably comes to mind is the language models that people love to use in the hope of getting quick and precise answers to their questions or problems. In this blog post, I would like to describe in detail how to deploy the underlying infrastructure in Azure with Terraform to create your own Azure OpenAI service with a GPT-4 deployment. I will also explain how to use the corresponding REST interface to send prompts to the model.

For further reading on Infrastructure as Code and the Azure OpenAI service, I would recommend the links listed in the References section at the end of this post.

Please use the AI services responsibly, and read the following carefully:

learn.microsoft.com - Responsible use of AI overview

2. Prerequisites

To replicate this example, you need the following prerequisites:

- Azure subscription: you need your own Azure subscription, in which you can deploy the related resources.

- Azure CLI: used to log in to your Azure subscription before running the Terraform commands.

- Terraform: used to run several commands in a terminal to provision the resources in an automated way.

- Python: used to send prompts through the REST API.

3. Preparing the environment

3.1 Clone the repository

Clone the repository using the link below in a directory of your choice:

github.com/patkoch - terraform-azure-cognitive-deployment

3.2 Log in to the Azure subscription using the Azure CLI

Start a terminal of your choice and enter the following Azure CLI command to log in:

az login

After that, a browser session will be started. Enter your personal credentials to complete the login to your Azure subscription.

3.3 Parameterize the Terraform configuration

The deployment, which is explained here, refers to the Azure region “West Europe” and uses the language model “gpt-4” with the version “turbo-2024-04-09”.

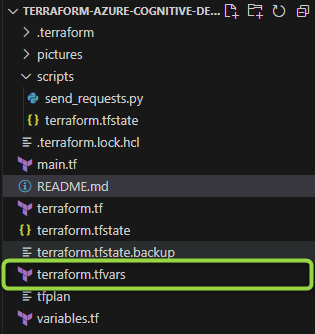

I will use a terraform.tfvars file to parameterize the Terraform configuration. The content of the file can be seen in the snippet below:

subscription_id = "<add your subscription id here>"

resource_group_name = "open-ai-test-west-europe-rg"

resource_group_location = "West Europe"

cognitive_account_name = "open-ai-test-west-europe-ca"

cognitive_account_kind = "OpenAI"

cognitive_account_sku_name = "S0"

cognitive_deployment_name = "open-ai-test-west-europe-cd"

cognitive_deployment_model_format = "OpenAI"

cognitive_deployment_model_name = "gpt-4"

cognitive_deployment_model_version = "turbo-2024-04-09"

cognitive_deployment_sku_name = "GlobalStandard"

cognitive_deployment_sku_capacity = 45

Add your Azure subscription ID in the first line.

If you need to determine the subscription ID, run the following Azure CLI command:

az account show
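The command prints a JSON document, and the subscription ID is the value of its "id" field. As a minimal sketch, the ID could be picked out of that output with Python. The function name and the shortened sample output below are made up for illustration and are not part of the repository:

```python
import json


def subscription_id_from_account_json(account_json: str) -> str:
    """Return the "id" field from the JSON printed by `az account show`."""
    account = json.loads(account_json)
    return account["id"]


# Shortened, made-up sample of the JSON output (real output has more fields).
sample_output = """
{
  "id": "00000000-0000-0000-0000-000000000000",
  "name": "My Subscription",
  "state": "Enabled"
}
"""

subscription_id = subscription_id_from_account_json(sample_output)
print(subscription_id)
```

If you only need the ID on the command line, `az account show --query id --output tsv` should print it directly.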

After that, save the file in the same directory as the files “terraform.tf”, “variables.tf” and “main.tf”.
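Before running Terraform, it can help to check that no placeholder value is left in the file. The following is only a sketch under simplifying assumptions: it handles flat `key = value` lines like the ones shown above (not the full Terraform variable syntax), and the helper names are hypothetical.

```python
import re

# Matches lines such as: name = "value"   or   name = 45
ASSIGNMENT = re.compile(r'^\s*(\w+)\s*=\s*(?:"([^"]*)"|(\S+))\s*$')
# A value that still looks like "<add your ... here>"
PLACEHOLDER = re.compile(r"^<.*>$")


def parse_simple_tfvars(text: str) -> dict:
    """Parse flat key = value lines into a dict of strings."""
    values = {}
    for line in text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # skip blank lines and comments
        match = ASSIGNMENT.match(line)
        if match is None:
            raise ValueError(f"unsupported line: {line!r}")
        key, quoted, bare = match.groups()
        values[key] = quoted if quoted is not None else bare
    return values


def unfilled_placeholders(values: dict) -> list:
    """Return the keys whose value is still a <...> placeholder."""
    return [key for key, value in values.items() if PLACEHOLDER.match(value)]


sample = '''
subscription_id = "<add your subscription id here>"
resource_group_location = "West Europe"
cognitive_deployment_sku_capacity = 45
'''

parsed = parse_simple_tfvars(sample)
print(unfilled_placeholders(parsed))
```

For the sample above, the check reports the "subscription_id" entry, because it still holds the placeholder text.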

4. Deployment of the Azure OpenAI Service and the GPT-4 Model using Terraform

Start a new terminal (or stick to the one you already used to log in to your Azure subscription), and change the directory to the path of the checked-out repository.

After that, run the following four Terraform commands:

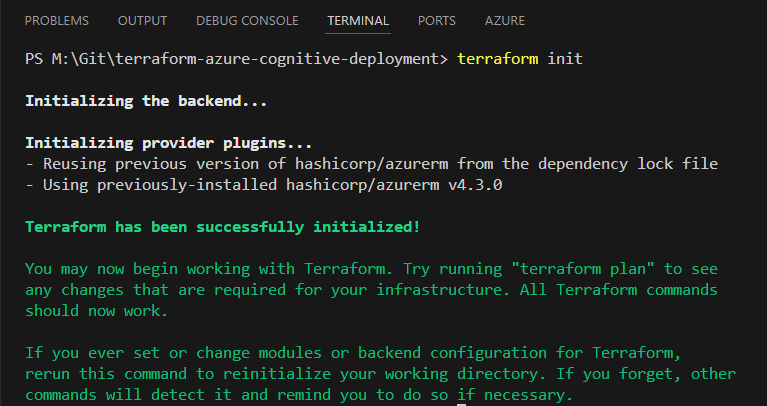
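If you prefer to chain the four commands instead of typing them one by one, a small Python wrapper is one option. This is a sketch, not part of the repository: the function names are hypothetical, and it assumes that the "terraform" executable is on your PATH and that you are already logged in with the Azure CLI. The individual commands are explained in the following subsections.

```python
import subprocess


def terraform_commands(plan_file: str = "tfplan") -> list:
    """Return the four Terraform commands of this post, in order."""
    return [
        ["terraform", "init"],
        ["terraform", "validate"],
        ["terraform", "plan", "-out", plan_file],
        ["terraform", "apply", plan_file],
    ]


def run_workflow(working_dir: str, plan_file: str = "tfplan") -> None:
    """Run the commands in working_dir and stop at the first failure."""
    for command in terraform_commands(plan_file):
        print("Running:", " ".join(command))
        # check=True raises CalledProcessError on a non-zero exit code,
        # so "apply" never runs after a failed "validate" or "plan".
        subprocess.run(command, cwd=working_dir, check=True)


# Usage (provisions real resources, so it is not called here):
# run_workflow("path/to/the/cloned/repository")
```

Stopping at the first failing command mirrors what you would do manually: there is no point in applying a plan that could not be created.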

4.1 Terraform init

This is the very first Terraform command to run after cloning the repository. It initializes the directory containing the Terraform files, in this case the directory that includes “terraform.tf”, “variables.tf” and “main.tf”.

terraform init

Reference: developer.hashicorp.com - Terraform CLI commands - init

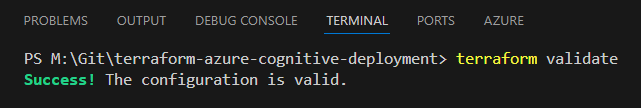

4.2 Terraform validate

This command verifies whether the Terraform files, which form the configuration, are syntactically valid and internally consistent.

terraform validate

Reference: developer.hashicorp.com - Terraform CLI commands - validate

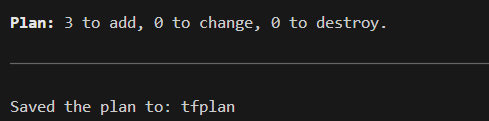

4.3 Terraform plan

This command creates an execution plan, which in this case is saved as a plan file, and provides an overview of the changes that will be made. Running it does not provision, change, or destroy any resource in Azure.

terraform plan -out tfplan

Reference: developer.hashicorp.com - Terraform CLI commands - plan

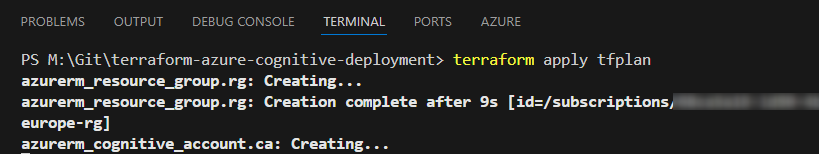

4.4 Terraform apply

This command executes the content of the provided plan file: “tfplan”.

terraform apply tfplan

Reference: developer.hashicorp.com - Terraform CLI commands - apply

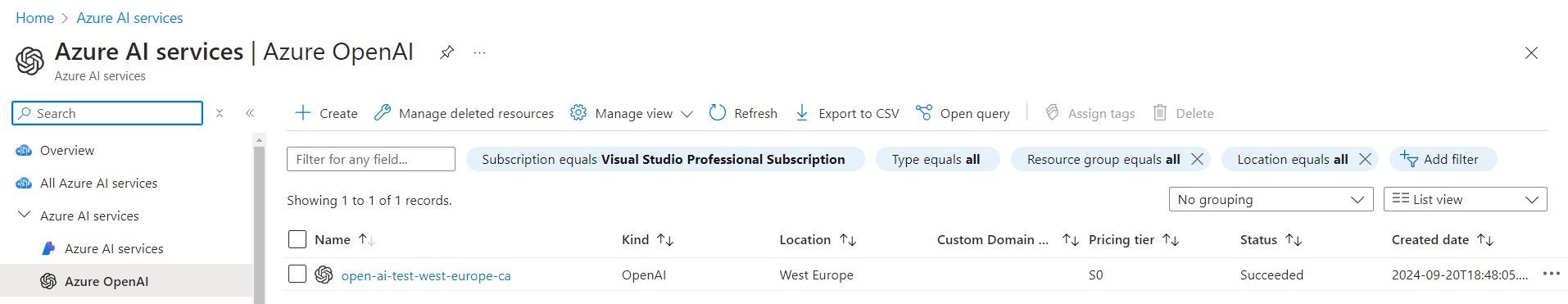

This finally deploys the Azure OpenAI service named “open-ai-test-west-europe-ca” and the “gpt-4” deployment “open-ai-test-west-europe-cd”.

If the deployment does not fit your needs, change the following values:

cognitive_deployment_model_format = "OpenAI"

cognitive_deployment_model_name = "gpt-4"

cognitive_deployment_model_version = "turbo-2024-04-09"

cognitive_deployment_sku_name = "GlobalStandard"

The following link provides an overview of the available models:

learn.microsoft.com - AI Services - Models

5. Providing prompts using the REST API

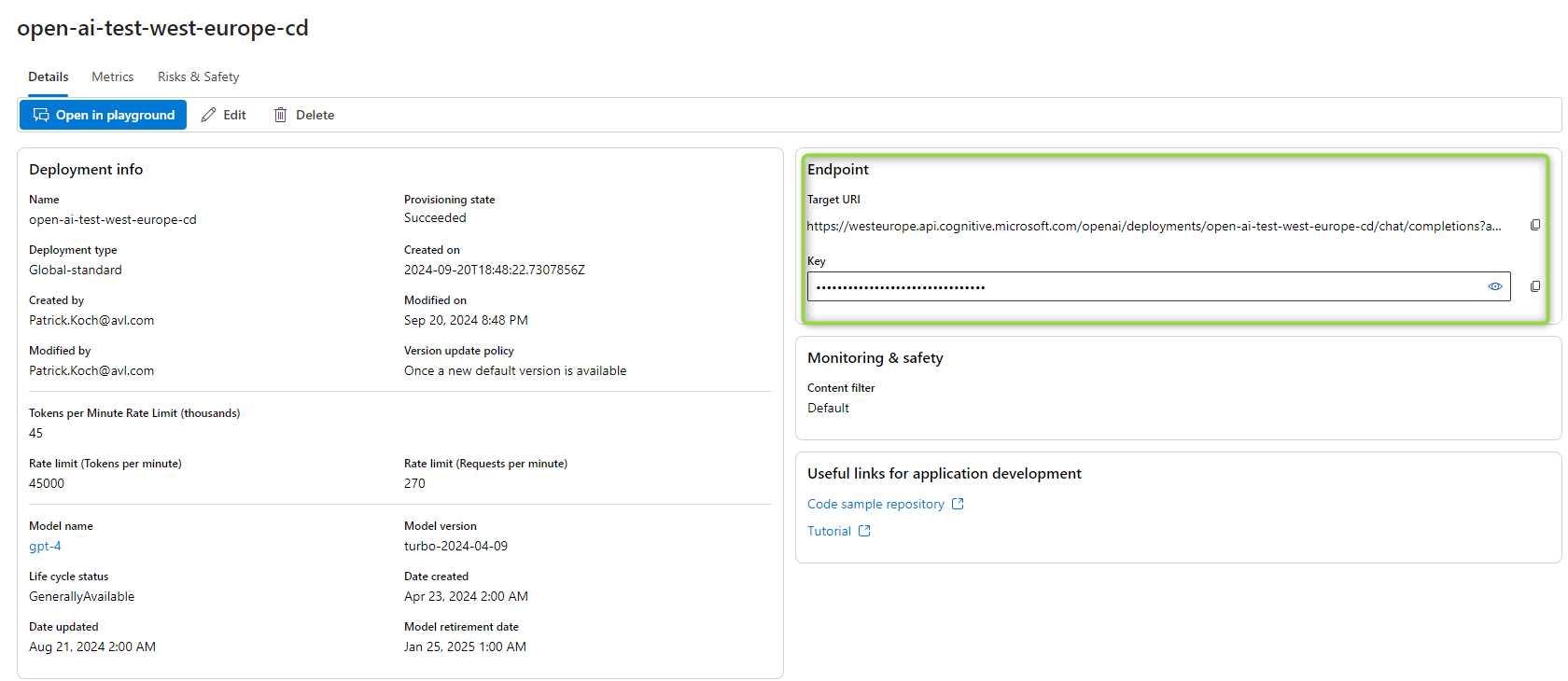

5.1 Get the endpoint credentials

You need the following information about the endpoint to establish a connection to the service:

- the API Key

- the Target URI

Explore the details of the deployment to find that information:

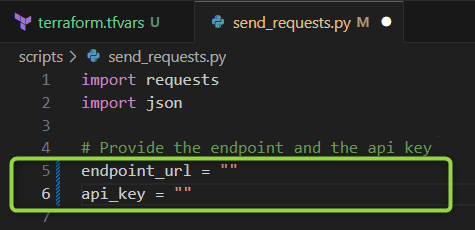
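The Target URI bundles several pieces of information: the endpoint of the service, the name of the deployment, and the API version. The sketch below splits a URI into those parts. The example URI, including the resource name and the "api-version" value, is an assumption for illustration; use the one shown for your own deployment.

```python
from urllib.parse import parse_qs, urlsplit


def split_target_uri(target_uri: str) -> dict:
    """Split a Target URI into endpoint, deployment name and API version."""
    parts = urlsplit(target_uri)
    segments = [segment for segment in parts.path.split("/") if segment]
    # Expected path: openai/deployments/<deployment name>/chat/completions
    deployment = segments[segments.index("deployments") + 1]
    query = parse_qs(parts.query)
    return {
        "endpoint": f"{parts.scheme}://{parts.netloc}",
        "deployment": deployment,
        "api_version": query.get("api-version", [None])[0],
    }


# Illustrative URI only; host and api-version are assumptions.
example_uri = (
    "https://example-resource.openai.azure.com/openai/deployments/"
    "open-ai-test-west-europe-cd/chat/completions?api-version=2024-02-01"
)

info = split_target_uri(example_uri)
print(info)
```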

5.2 Use a Python script to provide your prompts

Insert the values of the “API Key” and the “Target URI” in lines 5 and 6 of the file “/scripts/send_requests.py”:

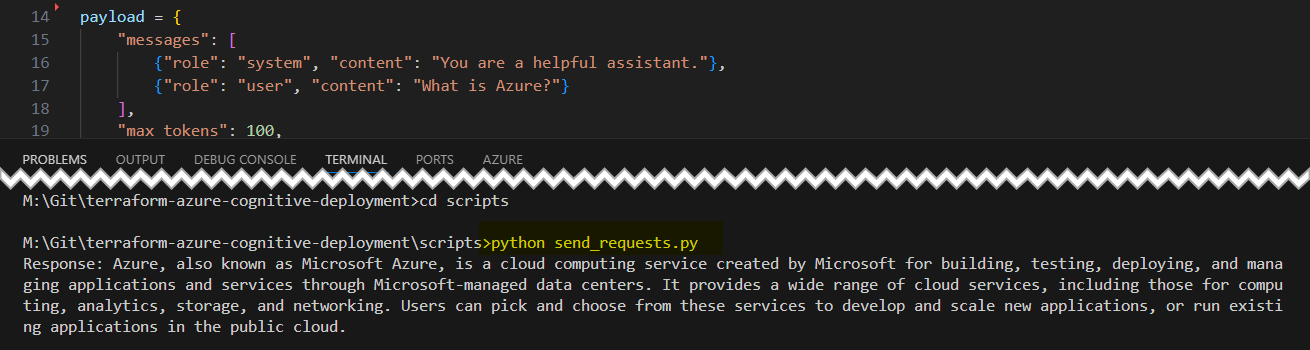

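Provide a prompt of your choice in line 16 of the “send_requests.py” file. After that, run the file using Python. This leads to a response; in my example, it is an explanation of Azure.

If your requests run into token limits, increase the capacity for the next deployment. The capacity is the deployment's tokens-per-minute quota in units of 1,000 tokens, so the value 45 corresponds to 45,000 tokens per minute. The length of a single response is limited by the request itself, for example by its “max_tokens” parameter.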
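To make the REST call itself more tangible, here is a minimal sketch of such a request using only the Python standard library. It is not the content of “send_requests.py”; the function names are hypothetical. It assumes a chat completions Target URI, the API key being passed in the "api-key" header, and a response that carries the answer under choices[0].message.content.

```python
import json
import urllib.request


def build_chat_request(target_uri, api_key, prompt, max_tokens=200):
    """Build (but do not send) a POST request carrying a single user prompt."""
    body = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,  # upper bound for the length of the answer
    }
    return urllib.request.Request(
        target_uri,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


def extract_answer(response_json: dict) -> str:
    """Return the text of the first choice of a chat completions response."""
    return response_json["choices"][0]["message"]["content"]


def send_prompt(target_uri, api_key, prompt):
    """Send the prompt and return the answer text (needs a live deployment)."""
    request = build_chat_request(target_uri, api_key, prompt)
    with urllib.request.urlopen(request) as response:
        return extract_answer(json.load(response))


# Usage with your own values (not executed here):
# print(send_prompt("<Target URI>", "<API Key>", "Explain Azure in two sentences."))
```

Keeping the request construction separate from the network call makes it possible to inspect the headers and the body before anything is sent.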

cognitive_deployment_sku_capacity = 45

Reference: learn.microsoft.com - OpenAI - Key Concepts

6. Destroy the resources using Terraform

If you want to destroy the provisioned resources, for example to avoid further costs, run the following command:

terraform destroy

7. Conclusion

This Terraform configuration allows you to provision several resources on Azure, among other things:

- a cognitive account

- a cognitive deployment

You can parameterize the Terraform configuration using the “terraform.tfvars” file. Adapt the provided values to the model you would like to deploy. Running the set of Terraform commands then provisions the resources. After getting the endpoint credentials and embedding them in the Python script, you are ready to start prompting.

References

github.com/patkoch - terraform-azure-cognitive-deployment

developer.hashicorp.com - Terraform Tutorial

learn.microsoft.com - What is Azure OpenAI Service?

learn.microsoft.com - OpenAI - Key Concepts

learn.microsoft.com - Responsible use of AI overview

developer.hashicorp.com - Terraform CLI commands - init

developer.hashicorp.com - Terraform CLI commands - validate